The Problem

As part of Craneware’s broader initiative to migrate its desktop-based healthcare financial management products to cloud-based applications, the Trisus Reference product team needed to understand which workflows users relied on most and how the cloud experience could improve clarity, speed, and confidence without disrupting trusted processes.

Trisus Reference functions as a foundational system of record for codes, mappings, and reference data used across multiple downstream financial, billing, and compliance workflows. Because errors or confusion at the reference level can cascade into significant operational and regulatory risk, any redesign or migration required a deep understanding of how users reviewed, validated, and trusted the data.

Many participants had 5–10+ years of experience using Trisus Reference and interacted with it daily or near-daily. Their work involved reviewing and maintaining large volumes of reference data under strict regulatory and audit requirements. These users valued accuracy, traceability, and predictability, and were wary of any change that did not clearly reduce risk or effort.

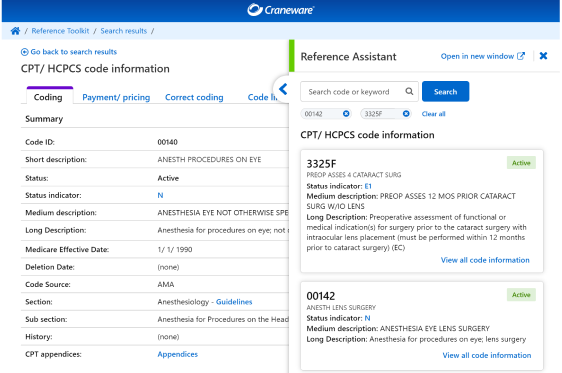

Trisus Reference with Reference Assistant View

The Plan

I designed a multi-method qualitative research strategy to capture not only what users needed from a cloud-based Trisus Reference, but also how they thought about reference data, risk, and review work. The intent was to ensure that the cloud experience aligned with existing mental models while addressing pain points around visibility, prioritization, and confidence.

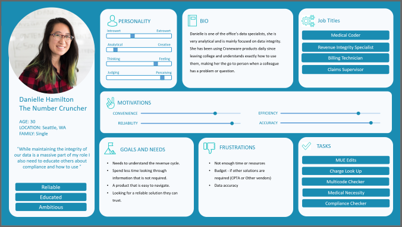

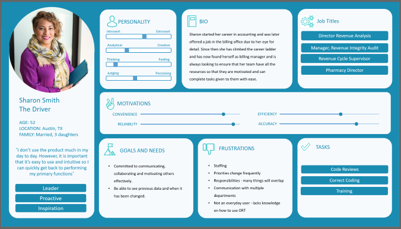

Example personas

Research Methodology

Created personas based on survey responses, usage patterns, and role characteristics

Distributed a qualitative survey to high-usage Trisus Reference users matching each persona to ensure proportional representation

Moderated focus groups with survey respondents to explore workflows, trust signals, and validation behavior

Conducted a card sorting exercise during focus groups to understand how users organized reference data and tasks

Analyzed survey responses and persona insights to shape the focus group discussion guides and card sort structure

During focus groups, participants completed a moderated card sorting exercise using cards representing:

Reference data types (codes, mappings, rule sets, updates)

User actions (review, validate, approve, investigate, export)

System signals (changes, errors, conflicts, downstream impact)

Participants consistently grouped cards by task and intent, rather than by internal system structure. Review- and validation-oriented items clustered together, while maintenance tasks were treated as a separate mental category.

The Results

Key Findings

Strong need for transparency and traceability

Users relied on Trisus Reference to find changes, but often lacked clarity around why a value changed.

Desire for simpler, at-a-glance validation

Across surveys, focus groups, personas, and card sorting, users consistently wanted faster answers to:

What changed since my last review?

What requires attention right now?

What is high-risk versus routine for review?

Mental models favor task-based organization

Differences across personas reinforced that users approached the product through review and validation tasks rather than through its internal system structure.

Expectation for modern, review-oriented dashboards

Users associated visual hierarchy and summaries with reduced error risk and increased confidence.

These findings helped shape early cloud design direction by emphasizing transparency, reduced cognitive load, and dashboard-driven workflows aligned with real-world usage patterns.

Customer satisfaction increased by 16 percentage points with the next update to the cloud software package, which incorporated these changes.